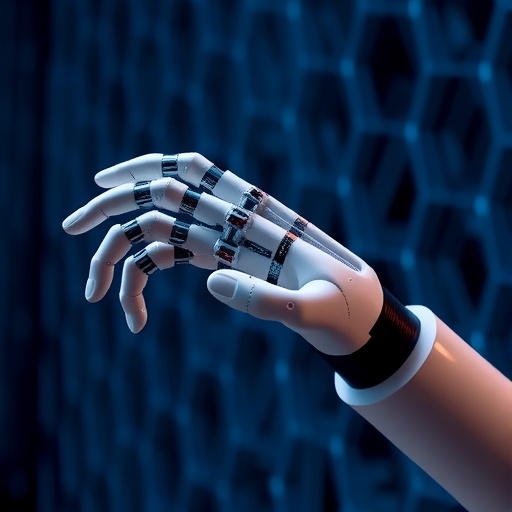

Advances in brain-computer interface (BCI) technology are opening new possibilities in assistive robotics, particularly for prosthetic devices. A team at Carnegie Mellon University led by Professor Bin He has demonstrated a noninvasive BCI that controls a robotic hand in real time at the level of individual fingers. This is a notable step for BCIs, which have the potential to transform daily life for people with disabilities.

Pairing BCIs with robotic systems offers a new way to improve quality of life for the more than one billion people worldwide who live with some form of disability. The team focused on electroencephalography (EEG)-based interfaces, which record brain activity from the scalp and require no surgical implantation. Avoiding surgery removes many of the risks associated with invasive BCIs, which in turn makes the technology potentially usable by a much broader population.

Professor Bin He, who has spent more than two decades in BCI research, stressed the importance of this contribution. “Improving hand function is a top priority for both impaired and able-bodied individuals,” he said. The point carries particular weight as healthcare systems face growing demand for tools that help people remain functionally independent.

Controlling a robotic hand finger by finger has significant implications. Because the hand is designed to mimic the dexterity people rely on for daily tasks, it could become an important tool for rehabilitation and independence in people with motor deficits. The study describes how the team’s new deep-learning decoding method translates brain signals into precise robotic movements. Individual finger control is the key advance: earlier BCI-driven prosthetic systems often lacked the fine motor control needed for everyday tasks such as typing.

Central to this work is the use of noninvasive EEG signals, which capture neural activity associated with motor intention. Rather than recognizing only coarse gestures, the system distinguishes the finer movements the user intends. With the signals decoded continuously, participants controlled the robotic hand to complete two- and three-finger tasks simply by imagining the corresponding movements, demonstrating what the approach can do.

The study, published in Nature Communications, notes that real-time control of individual fingers has so far been elusive, and that the new results point toward increasingly sophisticated control of robotic assistance. Beyond the engineering achievement, reaching this level of functionality deepens understanding of brain signaling and opens new directions for research and applications.

A pivotal element of the study is its use of advanced machine learning, which has become fundamental to turning raw neural data into usable commands. The team’s neural network improves both the speed and the accuracy of real-time decoding, so that the robotic hand responds smoothly to the user’s intentions. This marks a significant step in combining artificial intelligence with neurotechnology and suggests how more responsive, adaptive robotic systems might be built in the future.

The implications extend beyond assistive devices. Decoding fine finger movements in real time could benefit a wide range of fields, from rehabilitation for stroke patients to the design of next-generation prosthetics that respond intuitively to neural commands. Such advances could change how we think about assistive technologies, shifting them from simple replacements for lost function to intuitive extensions of the body.

Looking ahead, the team plans to refine the technology and improve the user experience, focusing on tasks that demand even greater dexterity. Potential applications include enabling users to type or perform other intricate manual tasks smoothly and efficiently. Control of this kind could approach the intuitive feel of a natural limb, pointing to a future in which assistive robotic devices are fully integrated into people’s lives.

The work also highlights the role of interdisciplinary collaboration in scientific innovation. By combining neuroscience, engineering, and artificial intelligence, researchers can build solutions with both practical and clinical impact, and the study’s findings show what such collaboration can achieve against some of society’s most pressing challenges.

As neuroprosthetics stands on the brink of what could be a new era, the scientific and medical communities must also weigh the ethics of these technologies. Their promise for improving quality of life has to be balanced against questions of access and equity, which call for open discussion among all stakeholders. Making these advances available equitably across diverse populations will require coordinated effort from researchers, policymakers, and practitioners.

In summary, the success of Bin He’s team at Carnegie Mellon opens a promising horizon for noninvasive brain-computer interfaces. Finger-level control is not only a technical achievement but also the start of a broader conversation about the future of robotics and neural interfaces, their applications, and their implications for society. As researchers continue to decipher the brain’s complexities, people may soon be able to use such technology to restore function, interact more fully with their surroundings, and regain autonomy in their lives.

Subject of Research: Noninvasive Brain-Computer Interface (BCI) for Robotic Hand Control

Article Title: EEG-based Brain-Computer Interface Enables Real-time Robotic Hand Control at Individual Finger Level

News Publication Date: 30-Jun-2025

Web References: Nature Communications Article

Tags: assistive robotics for disabilities, brain-computer interface advancements, Carnegie Mellon University BCI research, electroencephalography-based interfaces, enhancing quality of life with BCIs, finger-level control in robotics, future of assistive technology solutions, improving hand function in healthcare, noninvasive BCI technology, overcoming disability challenges with technology, prosthetic device functionality, robotic hand control innovation