Researchers have unveiled an interpretable artificial intelligence system that substantially reduces false-positive readings in MRI-based breast lesion detection, a development that could reshape breast cancer diagnostics. The innovation addresses a long-standing challenge in radiological practice: the balance between sensitivity and specificity, where efforts to avoid missed diagnoses often produce an excess of false alarms. Beyond improving diagnostic accuracy, the model adds a layer of transparency that many black-box machine learning tools lack, giving clinicians insight into how it stratifies high-risk lesions.

Breast magnetic resonance imaging (MRI) is a critical tool for detecting and characterizing breast lesions, especially in patients with dense breast tissue or those at high risk of cancer. However, the modality’s high sensitivity often comes at the cost of specificity: many benign lesions are flagged as suspicious, prompting unnecessary biopsies and patient anxiety. The interpretable AI system developed by Liang, Wei, Dai, and colleagues aims to mitigate these problems by stratifying lesion risk more precisely, helping clinicians make better-informed decisions.

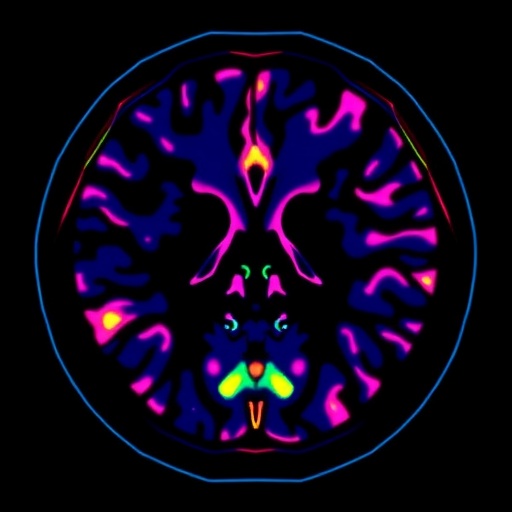

At the heart of this AI system lies an algorithmic framework that emphasizes interpretability, meaning the ability to understand the rationale behind a machine’s decision. Rather than passing imaging data through opaque layers and returning only a verdict, the system uses a multi-scale feature extraction mechanism that assesses lesion morphology, texture patterns, and dynamic contrast enhancement kinetics in a manner aligned with radiological principles. This alignment lets the system accompany each diagnostic output with explanatory data showing which imaging characteristics influenced the risk classification.

The model was trained and validated on a dataset of thousands of breast MRI scans collected from multiple institutions, spanning a broad range of lesion types and patient demographics. This diversity is key to the system’s generalizability and robustness across clinical settings. What sets the approach apart is not only its overall performance but its ability to reduce false positives without compromising sensitivity, a difficult balance with significant clinical implications.
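The trade-off described above can be made concrete with a short Python sketch. It assumes a model that outputs a malignancy probability for each lesion and a single decision threshold; the function name `evaluate`, the threshold values, and the toy labels and scores are hypothetical and do not come from the study. Sensitivity is the share of malignant lesions that get flagged, specificity is the share of benign lesions correctly cleared, and every benign lesion scored above the threshold counts as a false positive.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    sensitivity: float    # TP / (TP + FN): share of malignant lesions flagged
    specificity: float    # TN / (TN + FP): share of benign lesions cleared
    false_positives: int  # benign lesions flagged as suspicious


def evaluate(labels, scores, threshold):
    """Score binary decisions at a threshold (labels: 1 = malignant, 0 = benign)."""
    tp = fp = tn = fn = 0
    for label, score in zip(labels, scores):
        flagged = score >= threshold
        if flagged and label == 1:
            tp += 1
        elif flagged:
            fp += 1
        elif label == 0:
            tn += 1
        else:
            fn += 1
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return Metrics(sensitivity, specificity, fp)


# Hypothetical toy data: three malignant and five benign lesions.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.92, 0.81, 0.67, 0.60, 0.45, 0.30, 0.20, 0.10]

for threshold in (0.4, 0.65):
    print(threshold, evaluate(labels, scores, threshold))
```

On this toy data, raising the threshold from 0.4 to 0.65 removes both false positives while every malignant lesion is still flagged. On real data the two goals usually pull against each other, which is why lowering false positives without losing sensitivity is notable.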

In evaluation, the AI distinguished high-risk lesions from low-risk or benign abnormalities with high accuracy. By sorting lesions into risk categories based on a range of imaging biomarkers, the system helps radiologists prioritize cases that warrant immediate intervention while recommending caution or routine follow-up for others. This stratification could ease the physical, emotional, and financial burden on patients who would otherwise undergo unnecessary biopsies.

An intriguing aspect of the research is the incorporation of explainability tools that translate the AI’s internal reasoning into human-readable formats. For instance, heatmaps generated by the model visually highlight regions within the lesion that are most predictive of malignancy, thereby directing the radiologist’s attention to critical imaging features such as irregular borders or heterogeneous enhancement patterns. This symbiotic interaction between machine and clinician fosters trust and facilitates the integration of AI assistance into everyday diagnostic workflows.
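The article does not say how the heatmaps are computed; gradient-based maps such as Grad-CAM and attention maps are common choices. As a stand-in, the Python sketch below uses occlusion sensitivity, a simpler and model-agnostic technique: it masks one patch of the image at a time and records how much the malignancy score drops, so regions whose removal lowers the score most light up. The `toy_score` model, the patch size, and the synthetic image are hypothetical and are not the study's method.

```python
import numpy as np


def occlusion_heatmap(image, score_fn, patch=4, stride=4, baseline=0.0):
    """Occlusion sensitivity: score drop when each patch is masked with `baseline`."""
    height, width = image.shape
    reference = score_fn(image)
    heat = np.zeros((height, width))
    counts = np.zeros((height, width))
    for top in range(0, height - patch + 1, stride):
        for left in range(0, width - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = baseline
            drop = reference - score_fn(occluded)
            # Spread the drop over the masked pixels; average where patches overlap.
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    return heat / np.maximum(counts, 1)


def toy_score(img):
    """Hypothetical 'model' whose score depends only on one central region."""
    return float(img[8:12, 8:12].mean())


image = np.zeros((16, 16))
image[8:12, 8:12] = 1.0  # a bright synthetic "lesion" region
heat = occlusion_heatmap(image, toy_score)
print(np.unravel_index(np.argmax(heat), heat.shape))
```

Here only the patch covering the synthetic lesion changes the score, so the heatmap is nonzero there and zero elsewhere. A real model would produce a graded map, which is then overlaid on the MRI slice.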

Beyond its diagnostic performance, the system reflects a broader shift toward responsible AI in medicine. By prioritizing interpretability, the researchers address ethical and regulatory concerns about automated decision-making in healthcare. Clinicians, patients, and policymakers can thus engage with the AI’s recommendations critically, which supports acceptance and more transparent communication with patients.

The implications of this AI advancement extend beyond breast imaging. The methodology of combining interpretability with clinical-grade performance could serve as a blueprint for other medical imaging domains where false-positive rates are high. Radiological disciplines such as prostate MRI, lung nodule detection, and brain tumor classification stand to benefit from similar AI-enhanced stratification techniques, promising a broader impact on diagnostic precision and patient care standards.

Furthermore, the system is built on convolutional neural networks fine-tuned with attention mechanisms that mimic human visual assessment strategies. It also incorporates temporal dynamics, capturing how the contrast agent washes in and out across sequential MRI phases, which enriches the feature set and improves prediction accuracy. Together, these techniques allow the AI to approach, and at times exceed, the diagnostic performance of expert radiologists.
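The wash-in and wash-out patterns mentioned above correspond to the kinetic curve assessment radiologists already use. The Python sketch below follows a common convention: initial enhancement is measured relative to the pre-contrast signal, and the delayed-phase change relative to the early peak is labeled persistent, plateau, or washout using a ±10% band. It works on a single region-of-interest signal at three time points. The function name, the three-point simplification, and the exact tolerance are assumptions; the article does not describe the study's own kinetic features at this level of detail.

```python
from typing import NamedTuple


class Kinetics(NamedTuple):
    initial_enhancement: float  # fractional rise from pre-contrast to early phase
    delayed_change: float       # fractional change from early to delayed phase
    curve_type: str             # "persistent", "plateau", or "washout"


def classify_kinetic_curve(pre, early, late, tolerance=0.10):
    """Classify a three-point enhancement curve (signal intensities of one ROI)."""
    if pre <= 0 or early <= 0:
        raise ValueError("signal intensities must be positive")
    initial = (early - pre) / pre
    delayed = (late - early) / early
    if delayed > tolerance:
        curve = "persistent"   # signal keeps rising: typically favors benign
    elif delayed < -tolerance:
        curve = "washout"      # signal falls after the peak: more suspicious
    else:
        curve = "plateau"      # signal levels off within the tolerance band
    return Kinetics(initial, delayed, curve)


print(classify_kinetic_curve(pre=100, early=200, late=240).curve_type)
print(classify_kinetic_curve(pre=100, early=250, late=200).curve_type)
print(classify_kinetic_curve(pre=100, early=200, late=205).curve_type)
```

A learned model can consume the full time series rather than three points, but hand-crafted descriptors like these are one way to keep kinetic evidence readable to radiologists.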

The system’s outputs are also designed to be clinically intuitive. Alongside a probabilistic score, it produces a concise interpretive summary indicating not just whether a lesion is likely malignant but which factors drove the risk assessment, such as lesion size, shape irregularity, and internal enhancement heterogeneity. This granularity supports personalized clinical decision-making and could help guide the choice between biopsy, enhanced surveillance, and therapeutic intervention.
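One simple way to report a score together with its influencing factors is an additive model, in which each feature's contribution to the log-odds can be read off directly. The Python sketch below illustrates that pattern with a logistic score over three of the factors named above. The feature encodings, weights, and bias are invented for illustration and are not the study's model, which the article describes as a neural network.

```python
import math

# Hypothetical weights and bias for illustration only; NOT taken from the study.
WEIGHTS = {
    "size_mm": 0.08,                   # lesion diameter in millimeters
    "shape_irregularity": 1.5,         # 0 = smooth/oval ... 1 = highly irregular
    "enhancement_heterogeneity": 1.2,  # 0 = homogeneous ... 1 = highly heterogeneous
}
BIAS = -3.0


def explain(features):
    """Return malignancy probability, ranked log-odds contributions, and a summary."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))  # logistic (sigmoid) function
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    drivers = ", ".join(f"{name} {value:+.2f}" for name, value in ranked)
    summary = f"Malignancy probability {probability:.2f}; contributions: {drivers}"
    return probability, ranked, summary


lesion = {"size_mm": 15, "shape_irregularity": 0.9, "enhancement_heterogeneity": 0.8}
probability, ranked, summary = explain(lesion)
print(summary)
```

For this example lesion, shape irregularity contributes most to the log-odds, followed by size and then enhancement heterogeneity. Ranking contributions this way is what turns a single probability into a summary a clinician can check against the images.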

The study also highlights the potential of continuous learning, in which the model is updated through feedback loops drawing on newly acquired data and clinician input. Such adaptability could keep the system current with evolving imaging protocols, lesion phenotypes, and population health trends, an advantage over static diagnostic algorithms and a route to sustained performance over time.

Ethical considerations were central throughout the AI’s design and validation. The researchers sought a diverse dataset to limit algorithmic bias and to support equitable performance across patient groups regardless of age, ethnicity, or breast density category. Transparency about data provenance and model decision-making further aligns the system with clinical governance standards.

The AI’s deployment scenario envisions an augmented diagnostic environment in which radiologists interact with the system in real time, harnessing its analytical power while exercising their own clinical judgment. Such synergy is expected to streamline imaging workflows, shorten diagnostic turnaround times, and reassure patients through more precise communication of findings and associated risks.

While the AI system is a powerful tool, researchers are careful to emphasize that it complements rather than replaces human expertise. Its primary role lies in supporting radiologists by flagging subtle imaging cues that may escape human perception or by providing a second opinion in equivocal cases. The interpretability focus ensures that clinicians remain in control, with the AI serving as an intelligent assistant rather than an autonomous decision-maker.

Future research directions include expanding validation across multinational cohorts, integrating multimodal imaging data such as mammography and ultrasound, and exploring the combination of imaging with genomic and clinical data to create even more comprehensive risk models. Such integrative approaches could usher in a new era of precision oncology, where diagnosis, prognosis, and treatment planning are seamlessly informed by AI-augmented imaging analytics.

The work sits at the intersection of machine learning innovation and clinical necessity. Because breast cancer remains a leading cause of cancer death among women worldwide, reducing diagnostic errors with such tools holds considerable promise. Fewer false positives mean fewer invasive procedures for patients, better resource allocation for healthcare systems, and more time for clinicians to focus on personalized care.

In conclusion, the interpretable AI system introduced by Liang and colleagues represents a significant step forward in medical imaging diagnostics. It combines transparency, accuracy, and clinical applicability, qualities essential for widespread adoption. As the technology matures and enters clinical practice, it could help radiologists, clinicians, and patients navigate the complexities of breast cancer diagnosis with greater confidence and clarity.

Subject of Research: Interpretable artificial intelligence system for breast MRI diagnosis to reduce false positives by stratifying high-risk breast lesions.

Article Title: An interpretable AI system reduces false-positive MRI diagnoses by stratifying high-risk breast lesions.

Article References:

Liang, Y., Wei, Z., Dai, Y. et al. An interpretable AI system reduces false-positive MRI diagnoses by stratifying high-risk breast lesions. Nat Commun (2026). https://doi.org/10.1038/s41467-026-69212-7

Image Credits: AI Generated

Tags: AI innovations in healthcare, balancing sensitivity and specificity in diagnostics, breast cancer diagnostics, breast MRI for dense tissue, computational techniques in medical imaging, enhancing diagnostic accuracy in radiology, high-risk lesion stratification, improving patient outcomes in breast cancer, interpretable artificial intelligence, MRI-based lesion detection, reducing false positives in breast imaging, transparent machine learning tools