In recent years, the advancement of agricultural technology has accelerated dramatically, with autonomous tractors, drones, and various smart devices becoming increasingly prevalent across modern farmlands. This digital transformation promises heightened productivity and efficiency; however, it also introduces new safety challenges, most notably the risk of collisions between mechanized equipment and human workers. As farms grow larger and more automated, the imperative for real-time, accurate pedestrian detection systems capable of functioning reliably under harsh agricultural conditions has become more urgent than ever.

Detecting pedestrians in farmland environments is notoriously difficult for computer vision systems. Compared with urban scenes, where lighting and occlusion tend to be more predictable, agricultural fields exhibit highly variable illumination caused by changing weather, shadows from vegetation, and time-of-day effects. Moreover, workers are often densely clustered or partially obscured by machinery, plants, or terrain features, creating complex visual patterns that confound traditional detection algorithms. These difficulties are magnified on edge devices with limited computational power, which commonly use low-resolution cameras to reduce cost and simplify deployment.

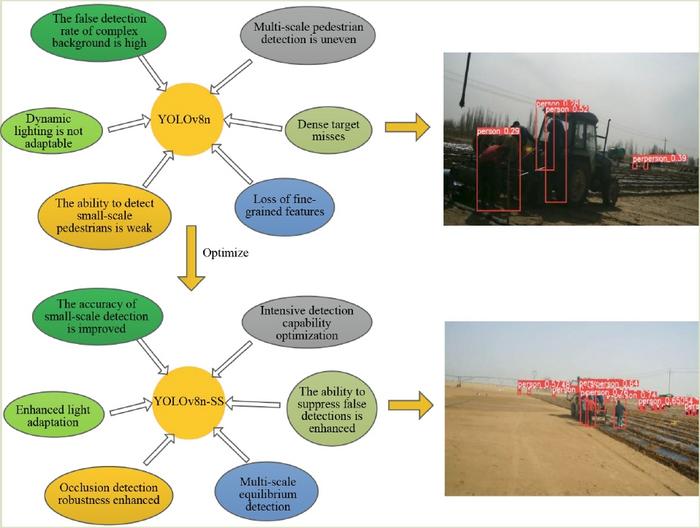

To address these obstacles, a research team led by Associate Professor Yanfei Li at Hunan Agricultural University has developed a pedestrian detection algorithm tailored to agricultural settings. The algorithm, reported in the journal Frontiers of Agricultural Science and Engineering, builds on YOLOv8n, a real-time object detection architecture known for its speed and adaptability. By adapting YOLOv8n to the environmental complexities of farmland, the approach, dubbed YOLOv8n-SS, improves both accuracy and efficiency in this specialized domain (DOI: 10.15302/J-FASE-2025613).

A critical insight in this work is that convolutional neural networks (CNNs), including earlier YOLO versions, often lose crucial fine-grained detail when processing the low-resolution images typical of agricultural devices. The loss stems mainly from conventional strided convolutions and pooling operations, which downsample feature maps and discard subtle cues essential for detecting small or partially visible objects. To mitigate this, the team incorporated a Space-to-Depth Convolution (SPD-Conv) module. SPD-Conv rearranges blocks of spatial data into the channel (depth) dimension and then applies a non-strided convolution, so the network reduces resolution without discarding pixels during feature extraction. This sharpens the model’s perception of small targets, such as pedestrians at a distance or those partly hidden behind crops.
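The rearrangement at the heart of SPD-Conv can be illustrated with a minimal NumPy sketch. It is not the team's implementation: the function name `space_to_depth`, the `(C, H, W)` layout, and the order in which sub-grids are concatenated are assumptions made for illustration. The point it shows is that resolution is halved while every input value is kept in the channel dimension.

```python
import numpy as np

def space_to_depth(x: np.ndarray, scale: int = 2) -> np.ndarray:
    """Rearrange a (C, H, W) feature map into (C * scale**2, H // scale, W // scale).

    Hypothetical helper for illustration: spatial resolution is traded for
    channels, so no input value is dropped.
    """
    c, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("height and width must be divisible by scale")
    # Each sub-grid takes every `scale`-th pixel starting at offset (i, j).
    sub_grids = [x[:, i::scale, j::scale] for i in range(scale) for j in range(scale)]
    # Stack the sub-grids along the channel axis (ordering is an assumption).
    return np.concatenate(sub_grids, axis=0)

feature_map = np.arange(16, dtype=np.float32).reshape(1, 4, 4)
packed = space_to_depth(feature_map)
print(packed.shape)  # (4, 2, 2)
```

In a full SPD-Conv layer, a convolution with stride 1 would then mix the enlarged channel dimension; that step is omitted here to keep the sketch short.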

Complementing the SPD-Conv module, the researchers integrated a Selective Kernel (SK) attention mechanism. Unlike standard convolutional layers, which apply a fixed receptive field, SK attention runs branches with different kernel sizes and dynamically weights their outputs, enabling the network to focus on the most informative scales in the scene. This flexibility matters in farmland scenarios, where the size and appearance of targets vary widely. By emphasizing relevant spatial features in crowded or occluded environments, the SK mechanism enhances the model’s localization precision, reducing false positives and missed detections.
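The selection step of SK attention can be sketched roughly as follows, again in NumPy and under stated assumptions. The branch feature maps are taken as given (in a real network they would come from convolutions with different kernel sizes), the weight matrices `w_reduce` and `w_select` are random placeholders, and normalization layers are left out. The function name `selective_kernel_fuse` is hypothetical.

```python
import numpy as np

def selective_kernel_fuse(branches, w_reduce, w_select):
    """Fuse M branch feature maps with per-channel softmax weights.

    branches: list of M arrays shaped (C, H, W), assumed to come from
              convolutions with different kernel sizes.
    w_reduce: (D, C) placeholder weights for the squeeze step.
    w_select: (M, C, D) placeholder weights producing one logit per branch and channel.
    """
    stacked = np.stack(branches)                   # (M, C, H, W)
    fused = stacked.sum(axis=0)                    # combine branches
    squeezed = fused.mean(axis=(1, 2))             # global average pool -> (C,)
    compact = np.maximum(w_reduce @ squeezed, 0.0) # reduce + ReLU -> (D,)
    logits = w_select @ compact                    # (M, C)
    logits = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)  # softmax across branches
    out = (weights[:, :, None, None] * stacked).sum(axis=0)
    return out, weights

rng = np.random.default_rng(0)
small_kernel_out = rng.normal(size=(8, 6, 6))   # stand-in for a 3x3 branch
large_kernel_out = rng.normal(size=(8, 6, 6))   # stand-in for a 5x5 branch
w_reduce = rng.normal(size=(4, 8))
w_select = rng.normal(size=(2, 8, 4))
out, weights = selective_kernel_fuse([small_kernel_out, large_kernel_out], w_reduce, w_select)
print(out.shape)  # (8, 6, 6)
```

Because the weights for each channel sum to one across branches, the output is a per-channel blend of the receptive fields rather than a hard choice of one kernel size.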

To evaluate the effectiveness of YOLOv8n-SS, the team conducted extensive experiments on the public CrowdHuman dataset, a challenging benchmark for pedestrian detection characterized by crowded scenes and heavy occlusions. The improved model achieved a striking 7.2% increase in mean Average Precision (mAP) over the baseline YOLOv8n, illustrating its enhanced capability to identify individuals in complex settings. Furthermore, real-world field tests in actual farmland environments revealed a 7.6% mAP improvement, confirming the model’s practical applicability and robustness under diverse lighting and occlusion conditions.
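For readers unfamiliar with the metric, the sketch below shows one common way average precision (AP) is computed for a single class on a single image; mAP averages such values over classes and, in some protocols, over IoU thresholds. The article does not describe the team's exact evaluation protocol, so the 0.5 IoU threshold, the greedy matching rule, and the function names are assumptions for illustration only.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(detections, ground_truth, iou_threshold=0.5):
    """detections: list of (score, box); ground_truth: list of boxes."""
    if not ground_truth:
        return 0.0
    matched, hits = set(), []
    # Visit detections from most to least confident.
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if idx not in matched and overlap > best_iou:
                best_iou, best_idx = overlap, idx
        hit = best_idx is not None and best_iou >= iou_threshold
        if hit:
            matched.add(best_idx)  # each ground-truth box can be matched once
        hits.append(hit)
    precisions, recalls, true_pos = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        true_pos += hit
        precisions.append(true_pos / rank)
        recalls.append(true_pos / len(ground_truth))
    # Make precision non-increasing, then integrate over recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

workers = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 30, 30))]
ap = average_precision(predictions, workers)
print(round(ap, 3))  # 0.833
```

In this toy case the false detection ranked second lowers precision before the second worker is found, which is why AP falls below 1 even though both workers are eventually detected.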

One particularly compelling demonstration highlighted YOLOv8n-SS’s superior performance in low-light environments that typically hinder detection accuracy. Where conventional models often missed pedestrians or mistakenly flagged non-human objects, the enhanced algorithm reliably identified all personnel in the scene. Similarly, in densely populated field operations, the model significantly curbed false detections caused by confusing items such as equipment, luggage, or vegetation. This refinement leads not only to improved safety outcomes but also reduces operational inefficiency stemming from unnecessary machine stoppages or false alarms.

An equally important achievement lies in the balance this model strikes between accuracy and computational demand. While many advanced detection techniques require prohibitively large processing resources, YOLOv8n-SS maintains real-time capability suitable for embedded deployment on agricultural machinery and wearable devices. The algorithm achieves this efficiency through its SPD-Conv and SK attention modules, which add minimal overhead yet yield substantial performance gains. This makes it viable to deploy sophisticated pedestrian detection on devices with constrained hardware, extending the reach of intelligent safety measures across diverse agricultural settings.

The implications of this technology stretch beyond mere detection. By integrating YOLOv8n-SS into smart farming ecosystems, autonomous machinery can obtain continuous, precise awareness of human presence, enabling proactive collision avoidance and emergency responses. Systems can issue timely warnings or automatically halt operations if pedestrians enter hazardous zones, dramatically reducing accident risks. Moreover, this enhanced sensing capability supports the broader vision of “human-machine collaborative intelligence,” where agricultural robots and workers operate harmoniously, each complementing the other’s strengths.
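How detections might feed a warn-or-halt decision can be sketched as below. This is an illustrative outline rather than anything described by the research team: the `Detection` type, the rectangular image-space zones, the 0.5 score cutoff, and the action names are all hypothetical, and a real system would work in calibrated ground coordinates with additional safeguards.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

@dataclass(frozen=True)
class Detection:
    box: Box
    score: float

def foot_point(det: Detection) -> Tuple[float, float]:
    """Bottom-center of the box, used as a rough proxy for where the person stands."""
    x1, _, x2, y2 = det.box
    return ((x1 + x2) / 2.0, y2)

def in_zone(point: Tuple[float, float], zone: Box) -> bool:
    x, y = point
    return zone[0] <= x <= zone[2] and zone[1] <= y <= zone[3]

def decide_action(detections: List[Detection], halt_zone: Box, warn_zone: Box,
                  min_score: float = 0.5) -> str:
    """Return 'halt', 'warn', or 'continue' (hypothetical action names)."""
    action = "continue"
    for det in detections:
        if det.score < min_score:
            continue  # ignore low-confidence detections
        point = foot_point(det)
        if in_zone(point, halt_zone):
            return "halt"  # the most severe action wins immediately
        if in_zone(point, warn_zone):
            action = "warn"
    return action

halt_zone = (200.0, 300.0, 440.0, 480.0)  # assumed area directly ahead of the machine
warn_zone = (100.0, 200.0, 540.0, 480.0)  # assumed wider buffer around it
frame = [Detection((120.0, 150.0, 160.0, 260.0), 0.92),
         Detection((300.0, 250.0, 340.0, 400.0), 0.31)]
print(decide_action(frame, halt_zone, warn_zone))  # warn
```

Here the confident detection falls in the buffer zone and triggers a warning, while the low-confidence detection inside the halt zone is ignored; where to set that cutoff is a safety trade-off the deployment would need to make deliberately.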

Looking ahead, the research team plans further refinements focused on enhancing the model’s lightweight design to lower its computational footprint even further, facilitating deployment on increasingly compact devices. Additionally, integrating target-tracking algorithms is a promising next step, enabling not just detection but continuous monitoring of pedestrian behavior and movement trajectories over time. This longitudinal analysis would provide deeper insights into personnel dynamics, informing smart farm management decisions and operational planning.

This evolutionary path from mechanized agriculture to intelligent, interactive systems heralds a transformative era for farming worldwide. By harnessing deep learning techniques adapted to challenging field conditions, the study led by Associate Professor Yanfei Li and colleagues offers a practical roadmap to safer, more efficient, and more intelligent agricultural operations. As autonomous equipment proliferates, such innovations play a pivotal role in ensuring that human safety keeps pace with technological progress, ultimately helping to sustain the long-term productivity of farms and the wellbeing of workers.

The convergence of computer vision advancements with real-world agricultural needs demonstrated by this work exemplifies the accelerating synergy between AI research and practical industry applications. The demonstrated increases in detection accuracy, combined with real-time responsiveness and computational efficiency, establish YOLOv8n-SS as a frontrunner model for next-generation pedestrian detection in smart farming scenarios. With continued development and adoption, this technology promises to become a fundamental component of future agricultural safety frameworks, pushing the boundaries of what smart machines can achieve in complex, real environments.

In summary, the innovative integration of SPD-Conv and selective kernel attention within the YOLOv8n framework marks a significant leap in pedestrian detection suited for autonomous agriculture. It addresses core challenges posed by low resolution, occlusion, and dense crowds while maintaining agility and speed essential for real-time deployment. This advancement aligns with broader agricultural digitalization trends, supporting safer, more intelligent, and more productive farming modalities. The work represents a crucial step forward in embedding nuanced human awareness into automated systems, thereby driving the evolution toward truly collaborative human-robot ecosystems on farms.

Subject of Research: Not applicable

Article Title: Improved method for a pedestrian detection model based on YOLO

News Publication Date: 6-May-2025

Web References: https://doi.org/10.15302/J-FASE-2025613

Image Credits: Yanfei LI, Chengyi DONG

Keywords: Agriculture

Tags: advancements in agricultural robotics, agricultural automation and worker safety, autonomous farming equipment, challenges of computer vision in agriculture, detecting pedestrians in complex environments, edge computing for agricultural applications, innovative research in agricultural technology, pedestrian detection in agriculture, real-time safety systems for farms, safety challenges in modern farming, smart agricultural technology, visual detection algorithms for farmland