Apple cultivation is of major economic importance worldwide, yet it is consistently threatened by leaf diseases that can severely reduce crop yields. Among these, rust, powdery mildew, and brown spot are predominant, and each can inflict significant damage on orchard productivity. Historically, diagnosis has relied on visual inspection by professional agronomists, who assess leaf morphology, color variation, and texture to identify the presence and progression of disease. This manual process is labor-intensive, time-consuming, and prone to human error, especially when symptoms are subtle or at an early stage.

Advances in machine learning have enabled automated plant disease detection, in which algorithms analyze leaf images to locate diseased regions and classify disease types, often with high accuracy in controlled laboratory environments. Deploying such models under complex field conditions is harder. Variable lighting, shadows, cluttered backgrounds, and changing camera angles introduce noise that confounds many conventional models and degrades their performance in practice. This gap has spurred efforts to reconcile two priorities: “clear vision,” meaning precise disease identification, and “fast computation,” meaning real-time analysis suitable for on-site use.

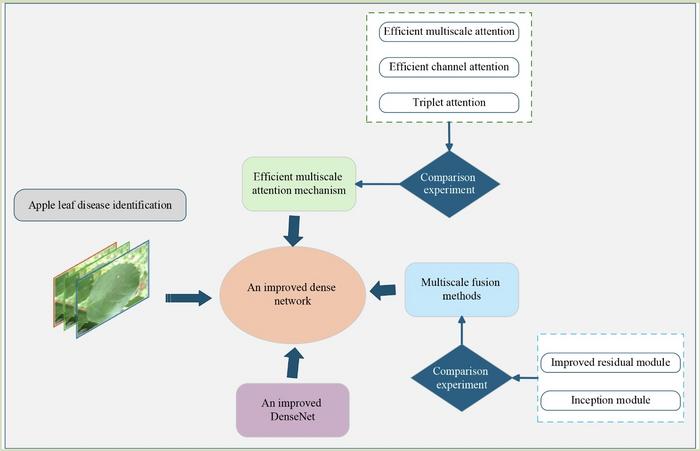

To address these challenges, a team led by Professor Hui Liu from the School of Traffic and Transportation Engineering at Central South University has developed a model named Incept_EMA_DenseNet. The approach integrates multi-scale feature extraction with an efficient attention mechanism. The model achieves an accuracy of 96.76%, surpassing prevailing mainstream networks. The gain comes from combining multi-scale analysis, which captures both minute details and overall lesion patterns, with an attention strategy that highlights diseased regions while suppressing irrelevant background information.

Traditional single-scale models often fall short because they cannot comprehensively capture the complex spatial hierarchies inherent in leaf disease manifestations. For example, rust is typified by distinctive yellow spots, whereas gray spot disease exhibits brown patches; both share similarities in local texture, yet their global distributions diverge significantly. The multi-scale fusion module embedded within the shallow layers of Incept_EMA_DenseNet addresses this by simultaneously attending to fine-grained textures and broader morphological characteristics, thereby enhancing the network’s discriminatory power.
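The idea of looking at the same leaf region at several scales at once can be illustrated with a minimal sketch. This is not the authors' fusion module: the fixed mean filters, the kernel sizes `(1, 3, 5)`, and the function names `box_filter` and `multi_scale_features` are assumptions chosen for illustration, whereas a real network would use learned convolution kernels in each branch.

```python
def box_filter(image, k):
    """Mean over a k x k window centred on each pixel (zero padding at borders)."""
    h, w = len(image), len(image[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
            out[y][x] = total / (k * k)
    return out


def multi_scale_features(image, kernel_sizes=(1, 3, 5)):
    """Run one branch per kernel size and stack the branch outputs per pixel."""
    branches = [box_filter(image, k) for k in kernel_sizes]
    h, w = len(image), len(image[0])
    return [[[b[y][x] for b in branches] for x in range(w)] for y in range(h)]


# Hypothetical 5x5 grayscale "leaf" with one bright lesion pixel in the centre.
leaf = [[0.0] * 5 for _ in range(5)]
leaf[2][2] = 1.0
features = multi_scale_features(leaf)
```

Each pixel now carries one value per scale. At the lesion itself the small kernel responds most strongly, while at a far corner only the largest kernel still registers the lesion, which is the kind of fine-versus-broad contrast a multi-scale branch structure is meant to expose.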

Complementing this is the Efficient Multi-scale Attention (EMA) mechanism, which selectively weights disease-specific regions. It emphasizes critical pathological features, such as the dense powdery deposits characteristic of powdery mildew, while down-weighting the large areas of healthy leaf tissue that do not contribute to classification. According to the team, EMA reduces computational complexity and network parameters by approximately 50% compared to conventional attention methods while improving classification accuracy by 1.38%. This balance exemplifies “intelligent focusing,” enabling models to be both lightweight and highly precise.
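The general principle of attention as reweighting can be shown with a deliberately simplified sketch. It is not the EMA module described in the paper, which uses learned parameters; here the weights come from a fixed sigmoid gate on each location's deviation from the mean activation, and the function name `spatial_attention` and the toy feature map are assumptions made for illustration.

```python
import math


def spatial_attention(feature_map):
    """Gate each location by a sigmoid of its deviation from the mean activation."""
    flat = [v for row in feature_map for v in row]
    mean = sum(flat) / len(flat)
    # Weight is above 0.5 for above-average activations, below 0.5 otherwise.
    weights = [[1.0 / (1.0 + math.exp(-(v - mean))) for v in row]
               for row in feature_map]
    attended = [[v * w for v, w in zip(row, wrow)]
                for row, wrow in zip(feature_map, weights)]
    return weights, attended


# Hypothetical 3x3 feature map: strong lesion response in the centre,
# weak response from healthy background tissue elsewhere.
fmap = [
    [0.1, 0.1, 0.1],
    [0.1, 4.0, 0.1],
    [0.1, 0.1, 0.1],
]
weights, attended = spatial_attention(fmap)
```

The centre location receives a weight close to 1 and the background locations receive weights below 0.5, so the lesion response is preserved while background responses are damped before classification.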

To further promote practical deployment, the research team optimized DenseNet_121, a widely respected convolutional neural network architecture, through a series of lightweight modifications tailored for field applications. This optimization ensures that the model runs efficiently on standard smartphones, empowering farmers to utilize ubiquitous mobile devices for immediate disease diagnosis. By simply photographing leaves using their phone cameras, non-expert users can access advanced diagnostic capabilities that previously required specialized laboratory equipment and expert assessments.

The validation of this groundbreaking technique was conducted on an extensive dataset comprising 15,000 images, carefully curated to reflect diverse real-world conditions. In rigorous mixed testing across eight common leaf diseases—including those with overlapping visual signatures such as brown spot and gray spot—and healthy leaves, Incept_EMA_DenseNet consistently produced accuracy rates exceeding 94%. The model demonstrated robust adaptability to fluctuating lighting environments and various camera perspectives, underscoring its readiness for practical in-field deployment.

Beyond its technical prowess, the implications of this technology for sustainable and responsible agriculture are profound. By enabling swift and accurate disease detection, it equips farmers with the capability to administer targeted treatments. This precision reduces the overuse of pesticides, mitigates unnecessary chemical exposure to the environment, and diminishes economic losses wrought by disease outbreaks. The accessibility and user-friendly nature of the system hold promise for widespread adoption, potentially transforming disease management practices in apple orchards worldwide.

The fusion of multi-scale feature analysis and an efficient attention mechanism marks a significant milestone in leveraging artificial intelligence for agricultural innovation. Furthermore, by seamlessly integrating advances in deep learning with practical constraints of field use, this research exemplifies how multidisciplinary expertise can converge to address enduring challenges in crop health monitoring. The team’s work, published in Frontiers of Agricultural Science and Engineering, stands as a compelling testament to the potential of AI-guided agronomy.

Looking ahead, further refinements may explore extending the model’s capabilities to other crops and diseases, expanding its utility across diverse agricultural contexts. Additionally, real-time deployment within mobile applications, supplemented by cloud-based updates and community-driven data sharing, could create an ecosystem of intelligent crop health monitoring accessible to farmers at all scales. As this technology matures, it promises not only to enhance yield security but also to pioneer a new paradigm of precision agriculture powered by artificial intelligence.

In conclusion, Professor Hui Liu’s research delineates a sophisticated path forward for automated plant disease diagnosis, blending computational innovation with tangible agricultural benefits. By achieving high accuracy through multi-scale fusion and efficient attention within a lightweight architecture, the Incept_EMA_DenseNet model redefines what is possible in mobile, field-based plant health monitoring. This advancement exemplifies the transformative potential at the intersection of AI, agriculture, and environmental stewardship.

Subject of Research: Not applicable

Article Title: An improved multiscale fusion dense network with efficient multiscale attention mechanism for apple leaf disease identification

News Publication Date: 6-May-2025

Web References:

https://doi.org/10.15302/J-FASE-2024583

Image Credits: Dandan DAI, Hui LIU

Keywords: Agriculture

Tags: advancements in agricultural technology, apple disease detection, attention mechanisms in agriculture, automated disease diagnosis systems, challenges in agricultural imaging, enhancing crop yield through technology, leaf disease classification algorithms, machine learning for plant health, multi-scale feature analysis, overcoming visual inspection limitations, real-world application of AI in farming, rust and powdery mildew in apples